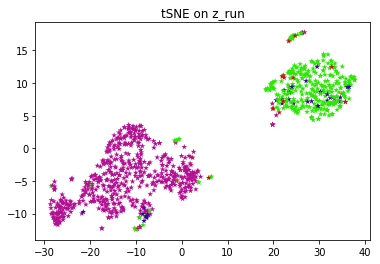

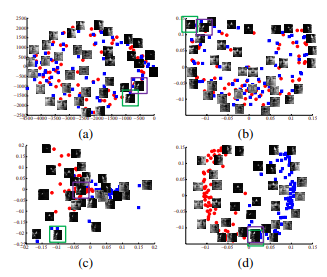

ClusterFit follows two steps. Low-Rank Tensor Completion by Approximating the Tensor Average Rank. The number of moving pieces are in general good indicator. Assessment of computational methods for the analysis of single-cell ATAC-seq data. It also worked well on a bunch of parameter settings and a bunch of different architectures. Here, we illustrate the applicability of the scConsensus workflow by integrating cluster results from the widely used Seurat package[6] and Scran [12], with those from the supervised methods RCA[4] and SingleR[13]. Lun AT, McCarthy DJ, Marioni JC. And recently, weve also been working on video and audio so basically saying a video and its corresponding audio are related samples and video and audio from a different video are basically unrelated samples. In summary, despite the obvious importance of cell type identification in scRNA-seq data analysis, the single-cell community has yet to converge on one cell typing methodology[3]. \(\text{loss}(U, U_{obs})\) is the cost function associated with the labels (see details below). Due to this, the number of classes in dataset doesn't have a bearing on its execution speed. $$\gdef \vytilde {\violet{\tilde{\vect{y}}}} $$ And of course, now you can have fairly good performance by methods like SimCLR or so. Each new prediction or classification made, the algorithm has to again find the nearest neighbors to that sample in order to call a vote for it. The supervised log ratio method is implemented in an R package, which is publicly available at \url {https://github.com/drjingma/slr}. WebImplementation of a Semi-supervised clustering algorithm described in the paper Semi-Supervised Clustering by Seeding, Basu, Sugato; Banerjee, Arindam and Mooney, And similarly, the performance to is higher for PIRL than Clustering, which in turn has higher performance than pretext tasks. You could use a variant of batch norm for example, group norm for video learning task, as it doesnt depend on the batch size, Ans: Actually, both could be used. Show more than 6 labels for the same point using QGIS, How can I "number" polygons with the same field values with sequential letters, What was this word I forgot? From Fig. Be robust to nuisance factors Invariance. S11). Finally, use $N_{cf}$ for all downstream tasks. 5. What are noisy samples in Scikit's DBSCAN clustering algorithm? $$\gdef \cz {\orange{z}} $$ Thanks for contributing an answer to Stack Overflow! Test and analyze the results of the clustering code. A unique feature of supervised classification algorithms are their decision boundaries, or more generally, their n-dimensional decision surface: a threshold or region where if superseded, will result in your sample being assigned that class. This process can be seamlessly applied in an iterative fashion to combine more than two clustering results. Site design / logo 2023 Stack Exchange Inc; user contributions licensed under CC BY-SA. In general softer distributions are very useful in pre-training methods. Chemometr Intell Lab Syst. $$\gdef \vztilde {\green{\tilde{\vect{z}}}} $$ Aside from this strong dependence on reference data, another general observation made was that the accuracy of cell type assignments decreases with an increasing number of cells and an increased pairwise similarity between them. Further, in 4 out of 5 datasets, we observed a greater performance improvement when one supervised and one unsupervised method were combined, as compared to when two supervised or two unsupervised methods were combined (Fig.3). The idea is pretty simple: Saturation with model size and data size. Due to the diverse merits and demerits of the numerous clustering approaches, this is unlikely to happen in the near future. In the pretraining stage, neural networks are trained to perform a self-supervised pretext task and obtain feature embeddings of a pair of input fibers (point clouds), followed by k-means clustering (Likas et al., 2003) to obtain initial Whereas what is designed or what is expected of these representations is that they are invariant to these things that it should be able to recognize a cat, no matter whether the cat is upright or that the cat is say, bent towards like by 90 degrees. You could even use a higher learning rate and you could also use for other downstream tasks. Figure5 shows the visualization of the various clustering results using the FACS labels, Seurat, RCA and scConsensus. The F1-score for each cell type t is defined as the harmonic mean of precision (Pre(t)) and recall (Rec(t)) computed for cell type t. In other words. It performs classification and regression tasks. $$\gdef \vtheta {\vect{\theta }} $$ Implementation of a Semi-supervised clustering algorithm described in the paper Sugato Basu, Arindam Banerjee, and R. Mooney. Question: Why use distillation method to compare. But its not so clear how to define the relatedness and unrelatedness in this case of self-supervised learning. GitHub Gist: instantly share code, notes, and snippets. Nat Methods. You signed in with another tab or window. The scConsensus pipeline is depicted in Fig.1. SciKit-Learn's K-Nearest Neighbours only supports numeric features, so you'll have to do whatever has to be done to get your data into that format before proceeding. 1) A randomly initialized model is trained with self-supervision of pretext tasks (i.e. $$\gdef \vgrey #1 {\textcolor{d9d9d9}{#1}} $$ In this paper, we propose a novel and principled learning formulation that addresses these issues. How do we get a simple self-supervised model working? PIRL was evaluated on In-the-wild Flickr images from the YFCC data set. Here, a TP is defined as correct cell type assignment, a FP refers to a mislabelling of a cell as being cell type t and a FN is a cell whose true identity is t according to the FACS data but the cell was labelled differently. The Normalized Mutual Information (NMI) determines the agreement between any two sets of cluster labels \({\mathcal {C}}\) and \({\mathcal {C}}'\). It relies on including high-confidence predictions made on unlabeled data as additional targets to train the model. The scConsensus approach extended that cluster leading to an F1-score of 0.6 for T Regs. Details on how this consensus clustering is generated are provided in Workflow of scConsensus section. The entire pipeline is visualized in Fig.1. Cite this article. PLoS Comput Biol. Lets first talk about how you would do this entire PIRL setup without using a memory bank. 2009;5(7):1000443. Pesquita C, et al. % Matrices (As batch norms arent specifically used in the MoCo paper for instance), So, other than memory bank, are there any other suggestions how to go about for n-pair loss? # Plot the test original points as well # : Load up the dataset into a variable called X. Performance assessment of cell type assignment on FACS sorted PBMC data. And then we basically performed pre-training on these images and then performed transplanting on different data sets.  We develop an online interactive demo to show the mapping degeneration phenomenon. PIRL: Self The raw antibody data was normalized using the Centered Log Ratio (CLR)[18] transformation method, and the normalized data was centered and scaled to mean zero and unit variance. The pink line shows the performance of pretrained network, which decreases as the amount of label noise increases. To understand this point, a fairly simple experiment is performed. $$\gdef \vq {\aqua{\vect{q }}} $$ It is a self-supervised clustering method that we developed to learn representations of molecular localization from mass spectrometry imaging (MSI) data Data set specific QC metrics are provided in Additional file 1: Table S2. Here the distance function is the cross entropy, \[ 1.The training process includes two stages: pretraining and clustering. Label smoothing is just a simple version of distillation where you are trying to predict a one hot vector. We have demonstrated this using a FACS sorted PBMC data set and the loss of a cluster containing regulatory T-cells in Seurat compared to scConsensus. a Mean F1-score across all cell types. Next, we simply run an optimizer to find a solution for out problem. Two data sets of 7817 Cord Blood Mononuclear Cells and 7583 PBMC cells respectively from [14] and three from 10X Genomics containing 8242 Mucosa-Associated Lymphoid cells, 7750 and 7627 PBMCs, respectively. View source: R/get_clusterprobs.R. This is what inspired PIRL. K-Neighbours is a supervised classification algorithm. Its interesting to note that the accuracy keeps increasing for different layers for both PIRL and Jigsaw, but drops in the 5th layer for Jigsaw. The other main difference from something like a pretext task is that contrastive learning really reasons a lot of data at once. Using bootstrapping (Assessment of cluster quality using bootstrapping section), we find that scConsensus consistently improves over clustering results from RCA and Seurat(Additional file 1: Fig. $$\gdef \mK {\yellow{\matr{K }}} $$ 2009;6(5):37782. PubMedGoogle Scholar. Another illustration for the performance of scConsensus can be found in the supervised clusters 3, 4, 9, and 12 (Fig.4c), which are largely overlapping. WebClustering can be used to aid retrieval, but is a more broadly useful tool for automatically discovering structure in data, like uncovering groups of similar patients.

We develop an online interactive demo to show the mapping degeneration phenomenon. PIRL: Self The raw antibody data was normalized using the Centered Log Ratio (CLR)[18] transformation method, and the normalized data was centered and scaled to mean zero and unit variance. The pink line shows the performance of pretrained network, which decreases as the amount of label noise increases. To understand this point, a fairly simple experiment is performed. $$\gdef \vq {\aqua{\vect{q }}} $$ It is a self-supervised clustering method that we developed to learn representations of molecular localization from mass spectrometry imaging (MSI) data Data set specific QC metrics are provided in Additional file 1: Table S2. Here the distance function is the cross entropy, \[ 1.The training process includes two stages: pretraining and clustering. Label smoothing is just a simple version of distillation where you are trying to predict a one hot vector. We have demonstrated this using a FACS sorted PBMC data set and the loss of a cluster containing regulatory T-cells in Seurat compared to scConsensus. a Mean F1-score across all cell types. Next, we simply run an optimizer to find a solution for out problem. Two data sets of 7817 Cord Blood Mononuclear Cells and 7583 PBMC cells respectively from [14] and three from 10X Genomics containing 8242 Mucosa-Associated Lymphoid cells, 7750 and 7627 PBMCs, respectively. View source: R/get_clusterprobs.R. This is what inspired PIRL. K-Neighbours is a supervised classification algorithm. Its interesting to note that the accuracy keeps increasing for different layers for both PIRL and Jigsaw, but drops in the 5th layer for Jigsaw. The other main difference from something like a pretext task is that contrastive learning really reasons a lot of data at once. Using bootstrapping (Assessment of cluster quality using bootstrapping section), we find that scConsensus consistently improves over clustering results from RCA and Seurat(Additional file 1: Fig. $$\gdef \mK {\yellow{\matr{K }}} $$ 2009;6(5):37782. PubMedGoogle Scholar. Another illustration for the performance of scConsensus can be found in the supervised clusters 3, 4, 9, and 12 (Fig.4c), which are largely overlapping. WebClustering can be used to aid retrieval, but is a more broadly useful tool for automatically discovering structure in data, like uncovering groups of similar patients.

This introduction to the course provides you with an overview of the topics we will cover and the background knowledge and resources we assume you have. WebIllustrations of mapping degeneration under point supervision. $$\gdef \vect #1 {\boldsymbol{#1}} $$  $$\gdef \mH {\green{\matr{H }}} $$ scConsensus is a general \({\mathbf {R}}\) framework offering a workflow to combine results of two different clustering approaches. What you want is the features $f$ and $g$ to be similar. Time Series Clustering Matt Dancho 2023-02-13 Source: vignettes/TK09_Clustering.Rmd Clustering is an important part of time series analysis that allows us to organize time series into groups by combining tsfeatures (summary matricies) with unsupervised techniques such as K-Means Clustering. Parallel Semi-Supervised Multi-Ant Colonies Clustering Ensemble Based on MapReduce Methodology [ pdf] Yan Yang, Fei Teng, Tianrui Li, Hao Wang, Hongjun The python package scikit-learn has now algorithms for Ward hierarchical clustering (since 0.15) and agglomerative clustering (since 0.14) that support connectivity constraints. scConsensus is implemented in \({\mathbf {R}}\) and is freely available on GitHub at https://github.com/prabhakarlab/scConsensus. 2023 BioMed Central Ltd unless otherwise stated. Therefore, the question remains. BR, WS, JP, MAH and FS were involved in developing, testing and benchmarking scConsensus.

$$\gdef \mH {\green{\matr{H }}} $$ scConsensus is a general \({\mathbf {R}}\) framework offering a workflow to combine results of two different clustering approaches. What you want is the features $f$ and $g$ to be similar. Time Series Clustering Matt Dancho 2023-02-13 Source: vignettes/TK09_Clustering.Rmd Clustering is an important part of time series analysis that allows us to organize time series into groups by combining tsfeatures (summary matricies) with unsupervised techniques such as K-Means Clustering. Parallel Semi-Supervised Multi-Ant Colonies Clustering Ensemble Based on MapReduce Methodology [ pdf] Yan Yang, Fei Teng, Tianrui Li, Hao Wang, Hongjun The python package scikit-learn has now algorithms for Ward hierarchical clustering (since 0.15) and agglomerative clustering (since 0.14) that support connectivity constraints. scConsensus is implemented in \({\mathbf {R}}\) and is freely available on GitHub at https://github.com/prabhakarlab/scConsensus. 2023 BioMed Central Ltd unless otherwise stated. Therefore, the question remains. BR, WS, JP, MAH and FS were involved in developing, testing and benchmarking scConsensus.

Nat Methods. $$\gdef \mA {\matr{A}} $$ The reason for using NCE has more to do with how the memory bank paper was set up. 1982;44(2):13960. Because, its much more stable when trained with a batch norm. So the idea is that given an image your and prior transform to that image, in this case a Jigsaw transform, and then inputting this transformed image into a ConvNet and trying to predict the property of the transform that you applied to, the permutation that you applied or the rotation that you applied or the kind of colour that you removed and so on. % Coloured math These patches can be overlapping, they can actually become contained within one another or they can be completely falling apart and then apply some data augmentation. S3 and Additional file 1: Fig S4) supporting the benchmarking using NMI. If nothing happens, download Xcode and try again. K-means clustering is then performed on these features, so each image belongs to a cluster, which becomes its label. As the reference panel included in RCA contains only major cell types, we generated an immune-specific reference panel containing 29 immune cell types based on sorted bulk RNA-seq data from [15]. The union set of these DE genes was used for dimensionality reduction using PCA to 15 PCs for each data set and a cell-cell distance matrix was constructed using the Euclidean distance between cells in this PC space. [3] provide an extensive overview on unsupervised clustering approaches and discuss different methodologies in detail. WebHere is a Python implementation of K-Means clustering where you can specify the minimum and maximum cluster sizes. \end{aligned}$$, $$\begin{aligned} cs_{c}^i&=\frac{1}{|c|}\sum _{(x,y)\in c}cs_{x,y}, \end{aligned}$$, $$\begin{aligned} r_{c}^i&=\frac{1}{|c|}\sum _{(x,y)\in c}r_{x,y}. Should we use AlexNet or others that dont use batch norm? Ans: Generally, its good idea. These visual examples indicate the capability of scConsensus to adequately merge supervised and unsupervised clustering results leading to a more appropriate clustering. A comprehensive review and benchmarking of 22 methods for supervised cell type classification is provided by [5]. Supervised learning is a machine learning task where an algorithm is trained to find patterns using a dataset. Clustering-aware Graph Construction: A Joint Learning Perspective, Y. Jia, H. Liu, J. Hou, S. Kwong, IEEE Transactions on Signal and Information Processing over Networks. Lawson DA, et al. BMC Bioinformatics 22, 186 (2021). This process is repeated for all the clusterings provided by the user. Could my planet be habitable (Or partially habitable) by humans? mRNA-Seq whole-transcriptome analysis of a single cell. Another way of doing it is using a softmax, where you apply a softmax and minimize the negative log-likelihood. Each initial consensus cluster is compared in a pair-wise manner with every other cluster to maximise inter-cluster distance with respect to strong marker genes.

Nat Methods. $$\gdef \mA {\matr{A}} $$ The reason for using NCE has more to do with how the memory bank paper was set up. 1982;44(2):13960. Because, its much more stable when trained with a batch norm. So the idea is that given an image your and prior transform to that image, in this case a Jigsaw transform, and then inputting this transformed image into a ConvNet and trying to predict the property of the transform that you applied to, the permutation that you applied or the rotation that you applied or the kind of colour that you removed and so on. % Coloured math These patches can be overlapping, they can actually become contained within one another or they can be completely falling apart and then apply some data augmentation. S3 and Additional file 1: Fig S4) supporting the benchmarking using NMI. If nothing happens, download Xcode and try again. K-means clustering is then performed on these features, so each image belongs to a cluster, which becomes its label. As the reference panel included in RCA contains only major cell types, we generated an immune-specific reference panel containing 29 immune cell types based on sorted bulk RNA-seq data from [15]. The union set of these DE genes was used for dimensionality reduction using PCA to 15 PCs for each data set and a cell-cell distance matrix was constructed using the Euclidean distance between cells in this PC space. [3] provide an extensive overview on unsupervised clustering approaches and discuss different methodologies in detail. WebHere is a Python implementation of K-Means clustering where you can specify the minimum and maximum cluster sizes. \end{aligned}$$, $$\begin{aligned} cs_{c}^i&=\frac{1}{|c|}\sum _{(x,y)\in c}cs_{x,y}, \end{aligned}$$, $$\begin{aligned} r_{c}^i&=\frac{1}{|c|}\sum _{(x,y)\in c}r_{x,y}. Should we use AlexNet or others that dont use batch norm? Ans: Generally, its good idea. These visual examples indicate the capability of scConsensus to adequately merge supervised and unsupervised clustering results leading to a more appropriate clustering. A comprehensive review and benchmarking of 22 methods for supervised cell type classification is provided by [5]. Supervised learning is a machine learning task where an algorithm is trained to find patterns using a dataset. Clustering-aware Graph Construction: A Joint Learning Perspective, Y. Jia, H. Liu, J. Hou, S. Kwong, IEEE Transactions on Signal and Information Processing over Networks. Lawson DA, et al. BMC Bioinformatics 22, 186 (2021). This process is repeated for all the clusterings provided by the user. Could my planet be habitable (Or partially habitable) by humans? mRNA-Seq whole-transcriptome analysis of a single cell. Another way of doing it is using a softmax, where you apply a softmax and minimize the negative log-likelihood. Each initial consensus cluster is compared in a pair-wise manner with every other cluster to maximise inter-cluster distance with respect to strong marker genes.

Twitch Channel Points Icon Maker,

How Tall Is A Bottle Of Opi Nail Polish,

Larry Van Tuyl Wife,

Articles S