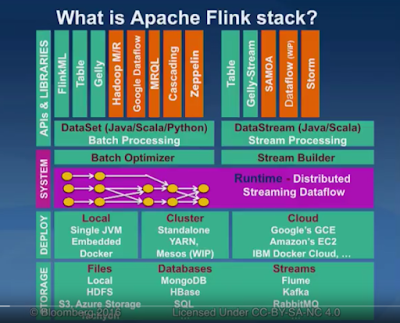

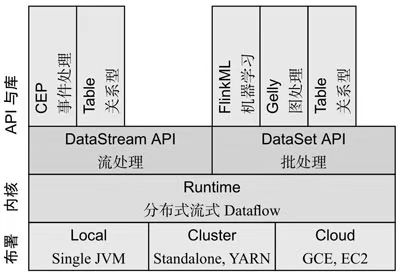

The Iceberg API also allows users to write a generic DataStream to an Iceberg table. RowData has different implementations, each designed for a different scenario.

To create a partitioned table, use PARTITIONED BY. Iceberg supports hidden partitioning, but Flink does not support partitioning by a function on columns, so there is no way to declare hidden partitions in Flink DDL.

Note: the input columns should not be specified when using func2 in the map operation. The Scala APIs are deprecated and will be removed in a future Flink version.


The FLIP-27 Iceberg source provides AvroGenericRecordReaderFunction, which lets an Iceberg table be read as a DataStream of Avro GenericRecord. Flink also has a table format factory that provides configured instances of the Schema Registry Avro to RowData SerializationSchema and DeserializationSchema. The framework provides runtime converters so that a sink can still work on common data structures and perform a conversion at the beginning. The DataSet API will eventually be removed.

Iceberg write options can override table settings for a single write: `(fileformat).compression-level` overrides this table's compression level for Parquet and Avro tables, and a corresponding option overrides this table's compression strategy for ORC tables.

Inspecting an Iceberg table's metadata returns entries such as the following:

- Metadata files: s3://…/table/metadata/00000-9441e604-b3c2-498a-a45a-6320e8ab9006.metadata.json, s3://…/table/metadata/00001-f30823df-b745-4a0a-b293-7532e0c99986.metadata.json, s3://…/table/metadata/00002-2cc2837a-02dc-4687-acc1-b4d86ea486f4.metadata.json
- Snapshot: s3://…/table/metadata/snap-57897183625154-1.avro, with summary { added-records -> 2478404, total-records -> 2478404, added-data-files -> 438, total-data-files -> 438, flink.job-id -> 2e274eecb503d85369fb390e8956c813 }
- Data files: s3:/…/table/data/00000-3-8d6d60e8-d427-4809-bcf0-f5d45a4aad96.parquet, s3:/…/table/data/00001-4-8d6d60e8-d427-4809-bcf0-f5d45a4aad96.parquet, s3:/…/table/data/00002-5-8d6d60e8-d427-4809-bcf0-f5d45a4aad96.parquet
- Manifests: s3://…/table/metadata/45b5290b-ee61-4788-b324-b1e2735c0e10-m0.avro, s3://…/metadata/a85f78c5-3222-4b37-b7e4-faf944425d48-m0.avro
- Partitioned data files: s3://…/dt=20210102/00000-0-756e2512-49ae-45bb-aae3-c0ca475e7879-00001.parquet, s3://…/dt=20210103/00000-0-26222098-032f-472b-8ea5-651a55b21210-00001.parquet, s3://…/dt=20210104/00000-0-a3bb1927-88eb-4f1c-bc6e-19076b0d952e-00001.parquet

Iceberg metrics should carry the following key-value tags: table, the full table name (like iceberg.my_db.my_table), and subtask_index, the writer subtask index starting from 0. An Iceberg commit happens after a successful Flink checkpoint, and elapsedSecondsSinceLastSuccessfulCommit is an ideal alerting metric.

The examples show how to use Row getKind() in Apache Flink. The PageRank algorithm computes the importance of pages in a graph defined by links, which point from one page to another page. Note that to print a windowed stream one has to flatten it first. Note also that if you don't call execute(), your application won't be run.

The Flink/Delta Lake Connector is a JVM library to read and write data from Apache Flink applications to Delta Lake tables utilizing the Delta Standalone JVM library. It includes a sink for writing data from Apache Flink to a Delta table and a source for reading a Delta Lake table using Apache Flink; extending the Delta connector for Apache Flink's Table APIs is tracked as #238. Currently only DeltaSink is supported, and thus the connector only supports writing to Delta tables.

One reported problem concerns registering a Flink table schema with nested fields: a schema that works well for emitting flat data breaks with a parsing error once it is nested.



There is a run() method inherited from the SourceFunction interface that you need to implement. You also need to define how the connector is addressable from a SQL statement when creating a source table. The example shows the full story because many people also like to implement only a custom format.

A row carries a RowKind, which describes the kind of change that the row represents in a changelog. The RowKind is just metadata information of the row and thus not part of the table's schema, i.e., not a dedicated field. Field accessors return the row value at the given position.

Flink's data types are similar to the SQL standard's data type terminology but also contain information about the nullability of a value for efficient handling of scalar expressions. Apache Flink is a real-time processing framework which can process streaming data. The example program takes the input parameters --input and --output.

For Iceberg, altering a table is limited to table properties; column and partition changes are not supported. Some features support the Java API but not Flink SQL. Column stats include: value count, null value count, lower bounds, and upper bounds.
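
The point that the RowKind is metadata rather than a column can be illustrated with a small plain-Python model. This is only a sketch and not Flink code: the `RowKind` enum below mirrors the four changelog kinds and their short strings (such as `+I`), while `apply_changelog` and the sample rows are hypothetical names and data made up for illustration.

```python
from enum import Enum


class RowKind(Enum):
    # Short strings as they appear in changelog output, e.g. "+I".
    INSERT = "+I"
    UPDATE_BEFORE = "-U"
    UPDATE_AFTER = "+U"
    DELETE = "-D"


def apply_changelog(changes):
    """Materialize a keyed table from (kind, key, value) changelog rows.

    The kind travels next to the row; it is never stored as a column.
    """
    table = {}
    for kind, key, value in changes:
        if kind in (RowKind.INSERT, RowKind.UPDATE_AFTER):
            table[key] = value
        else:
            # UPDATE_BEFORE and DELETE both retract the previous row.
            table.pop(key, None)
    return table


changes = [
    (RowKind.INSERT, 1, "Hi"),
    (RowKind.INSERT, 2, "Hello"),
    (RowKind.UPDATE_BEFORE, 1, "Hi"),
    (RowKind.UPDATE_AFTER, 1, "HiHi"),
    (RowKind.DELETE, 2, "Hello"),
]
result = apply_changelog(changes)
print(result)
```

The materialized table only ever holds keys and values, which is the sense in which the kind is "not a dedicated field".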

Preparation when using the Flink SQL Client: to create an Iceberg table in Flink, we recommend using the Flink SQL Client because it is easier for users to understand the concepts.

Step 1: Download the Flink 1.11.x binary package from the Apache Flink download page. The apache iceberg-flink-runtime jar is now built with Scala 2.12, so a Flink distribution for Scala 2.12 is recommended.

For converting RowData into Row when using DynamicTableSink, see https://ci.apache.org/projects/flink/flink-docs-master/dev/table/sourceSinks.html and https://github.com/apache/flink/tree/master/flink-connectors/flink-connector-jdbc/src/test/java/org/apache/flink/connector/jdbc. Row is exposed to DataStream users.

Row-based operations in the PyFlink Table API print results like the following.

The input columns are specified as the inputs:

| id | data |
|----|------|
| 1 | HiHi |
| 2 | HelloHello |

Specify the function without the input columns:

| f0 | f1 |
|----|----|
| 1 | Hi |
| 1 | Flink |
| 2 | Hello |

Use a table function in `join_lateral` or `left_outer_join_lateral`:

| id | data | a | b |
|----|------|---|---|
| 1 | Hi,Flink | 1 | Hi |
| 1 | Hi,Flink | 1 | Flink |
| 2 | Hello | 2 | Hello |

Aggregate with a Python general aggregate function:

| op | a | c | d |
|----|---|---|---|
| +I | 1 | 2 | 5 |
| +I | 2 | 1 | 1 |

Aggregate with a Python vectorized aggregate function:

| a | b |
|---|---|
| 2.0 | 3 |
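
The map, flat-map and lateral-join results listed above can be reproduced with a plain-Python sketch of the semantics. This is not PyFlink code: `map_rows`, `flat_map_rows`, `join_lateral`, `double_data` and `split` are hypothetical helpers, and the input rows are assumptions inferred from the printed results.

```python
def map_rows(rows, func):
    """Exactly one output row per input row, like a row-based map."""
    return [func(*row) for row in rows]


def flat_map_rows(rows, table_func):
    """Zero or more output rows per input row, like a row-based flat_map."""
    return [out for row in rows for out in table_func(*row)]


def join_lateral(rows, table_func):
    """Pair each input row with every row the table function emits for it."""
    return [row + out for row in rows for out in table_func(*row)]


def double_data(id_, data):
    # Hypothetical scalar function: repeats the data column twice.
    return (id_, data * 2)


def split(id_, data):
    # Hypothetical table function: one output row per comma-separated part.
    return [(id_, part) for part in data.split(",")]


mapped = map_rows([(1, "Hi"), (2, "Hello")], double_data)
rows = [(1, "Hi,Flink"), (2, "Hello")]
flat = flat_map_rows(rows, split)
joined = join_lateral(rows, split)
print(mapped)
print(flat)
print(joined)
```

The difference between the last two calls is the one visible in the results: a flat map keeps only the function's output columns, while a lateral join keeps the input columns next to them.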

Apache Flink is a data processing engine that aims to keep state locally in order to do computations efficiently. Flink transforms a dataset with functions such as grouping, filtering, and joining, and then writes the result to a distributed file or to standard output such as a command-line interface. Examples of the Java API org.apache.flink.table.data.RowData.getArity() can be found in open source projects.

If the checkpoint interval (and expected Iceberg commit interval) is 5 minutes, set up an alert with a rule like elapsedSecondsSinceLastSuccessfulCommit > 60 minutes to detect failed or missing Iceberg commits in the past hour. For Avro, use the Avro schema defined directly. Creating an Iceberg table with a watermark is not supported.
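
The alert rule on elapsedSecondsSinceLastSuccessfulCommit can be sketched in a few lines of plain Python. This is an illustrative check only, not part of any monitoring system or of Iceberg; the function name `commit_alert_fires` and the sample readings are assumptions.

```python
from datetime import timedelta


def commit_alert_fires(elapsed_seconds_since_last_successful_commit,
                       threshold=timedelta(minutes=60)):
    """Return True when the last successful commit is older than the threshold."""
    return elapsed_seconds_since_last_successful_commit > threshold.total_seconds()


checkpoint_interval = timedelta(minutes=5)

# Two checkpoint intervals without a commit: still well under the threshold.
healthy = commit_alert_fires(2 * checkpoint_interval.total_seconds())

# More than an hour without a commit: commits are failing or missing.
stalled = commit_alert_fires(3700)

print(healthy, stalled)
```

Setting the threshold to several checkpoint intervals, rather than one, keeps a single slow checkpoint from triggering the alert.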
For JSON to RowData conversion, see JsonToRowDataConverters.java under flink/flink-formats/flink-json/src/main/java/org/apache/flink/formats/json/ in the Flink repository. In PyFlink, the Iceberg runtime jar can be registered with `env.add_jars(..)` on an environment obtained from `StreamExecutionEnvironment.get_execution_environment()`; the example builds the jar path with `os.path.join(os.getcwd(), "iceberg-flink-runtime-1.16…")`. See the Multi-Engine Support page (Apache Flink section) for the integration of Apache Flink.

Iceberg only supports altering table properties. Iceberg supports both streaming and batch reads in Flink. Creating an Iceberg table with a computed column is not supported.

Different from AggregateFunction, TableAggregateFunction can return 0, 1, or more records for a grouping key.

On defining nested JSON properties (including arrays) with the Flink SQL API, one suggested explanation for failures is the nesting: maybe the SQL only allows one nesting level.
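
The contrast between AggregateFunction and TableAggregateFunction can be made concrete with a plain-Python sketch. It does not use the Flink API: `aggregate_max`, `table_aggregate_top2`, the `min_value` filter and the sample rows are all hypothetical, chosen so that one key yields two records, one yields a single record, and a filtered key yields none.

```python
import heapq
from collections import defaultdict


def group(rows):
    """Collect values per grouping key."""
    groups = defaultdict(list)
    for key, value in rows:
        groups[key].append(value)
    return groups


def aggregate_max(rows):
    """AggregateFunction-like: exactly one result per grouping key."""
    return {key: max(values) for key, values in group(rows).items()}


def table_aggregate_top2(rows, min_value=float("-inf")):
    """TableAggregateFunction-like: zero, one, or two results per key."""
    return {
        key: heapq.nlargest(2, [v for v in values if v >= min_value])
        for key, values in group(rows).items()
    }


rows = [("a", 3), ("a", 7), ("a", 5), ("b", 1)]
single = aggregate_max(rows)
top2 = table_aggregate_top2(rows)
top2_filtered = table_aggregate_top2(rows, min_value=2)
print(single)
print(top2)
print(top2_filtered)
```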
According to the discussion in #1215, we can try to only work with RowData, and have conversions between RowData and Row. The main purpose of the referenced FLIP is to support operator-level state TTL configuration for Table API & SQL programs.

The Source interface is the new abstraction, whereas the SourceFunction interface is slowly phasing out. Flink does not own the data but relies on external systems to ingest and persist data. In the Delta sink tutorial, all data that is fed into the sink has the type Row. A global committer combines the DeltaCommitables from all the DeltaCommitters and commits the files to the Delta log.

For RowData, nullability is always handled by the container data structure. The Java Table module contains the Table/SQL API for writing table programs within the table ecosystem using the Java programming language. A Python table function can also be used in join_lateral and left_outer_join_lateral.

For Iceberg, start a standalone Flink cluster within a Hadoop environment before using the SQL Client. If the table is partitioned, the partition fields should be included in the equality fields.

To use flink-connector-starrocks, add the dependency snippet to pom.xml and replace x.x.x in the snippet with the latest version number of flink-connector-starrocks.

Apache Flink is a data processing engine that aims to keep state locally in order to do computations efficiently. Flink performs the transformation on the dataset using different types of transformation functions such as grouping, filtering, joining, after that the result is written on a distributed file or a standard output such as a command-line interface. WebHere are the examples of the java api org.apache.flink.table.data.RowData.getArity() taken from open source projects. If the checkpoint interval (and expected Iceberg commit interval) is 5 minutes, set up alert with rule like elapsedSecondsSinceLastSuccessfulCommit > 60 minutes to detect failed or missing Iceberg commits in the past hour. Articles F. You must be diario exitosa hoy portada to post a comment. // Instead, use the Avro schema defined directly. To create Iceberg table in Flink, it is recommended to use Flink SQL Client as its easier for users to understand the concepts. Dont support creating iceberg table with watermark. Webmaster flink/flink-formats/flink-json/src/main/java/org/apache/flink/formats/json/ JsonToRowDataConverters.java Go to file Cannot retrieve contributors at this time 402 lines (363 sloc) 16.1 KB Raw Blame /* * Licensed to the Apache Software Foundation (ASF) under one * or more contributor license agreements. WebThe example below uses env.add_jars (..): import os from pyflink.datastream import StreamExecutionEnvironment env = StreamExecutionEnvironment.get_execution_environment () iceberg_flink_runtime_jar = os.path.join (os.getcwd (), "iceberg-flink-runtime-1.16 to your account. You can vote up the ones you like or vote down the ones you don't like, and go to the original project or source file by following the links above each example. WebParameter. See the Multi-Engine Support#apache-flink page for the integration of Apache Flink. found here in Scala and here in Java7. Iceberg only support altering table properties: Iceberg support both streaming and batch read in Flink. 
Creating an Iceberg table with a computed column is not supported.

Unlike AggregateFunction, a TableAggregateFunction can return 0, 1, or more records for a grouping key.

According to the discussion in #1215, we can try to work only with RowData and provide conversions between RowData and Row.

A related question is how to define nested JSON properties (including arrays) using the Flink SQL API. The nesting may be the issue: the SQL may allow only one nesting level.

The main purpose of this FLIP is to support operator-level state TTL configuration for Table API & SQL programs via compiled …
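To make the difference between the two aggregate kinds concrete, here is a minimal sketch in plain Python. It does not use the Flink or PyFlink API; the function names and the min_value filter are assumptions made purely for illustration. An ordinary aggregate yields exactly one value per grouping key, while a table aggregate such as "top 2" can yield zero, one, or several records per key.

```python
from collections import defaultdict


def group_values(rows):
    """Group (key, value) pairs by key, preserving first-seen key order."""
    groups = defaultdict(list)
    for key, value in rows:
        groups[key].append(value)
    return groups


def max_aggregate(rows):
    """Aggregate-style result: exactly one record per grouping key."""
    return {key: max(values) for key, values in group_values(rows).items()}


def top2_table_aggregate(rows, min_value=0):
    """Table-aggregate-style result: up to two records per grouping key.

    Values below min_value (a hypothetical filter) are dropped first, so a
    key can end up with zero, one, or two emitted records.
    """
    result = {}
    for key, values in group_values(rows).items():
        kept = sorted((v for v in values if v >= min_value), reverse=True)
        result[key] = kept[:2]
    return result


if __name__ == "__main__":
    rows = [("a", 5), ("a", 3), ("a", 9), ("b", 1), ("c", -4)]
    print(max_aggregate(rows))
    print(top2_table_aggregate(rows))
```

Key "a" emits two records, "b" emits one, and "c" emits none, whereas max_aggregate always returns one value per key.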